Docker

From Wikipedia -

“Docker is a set of platform as a service products that use OS-level virtualization to deliver software in packages called containers”

In simple words -

Docker simplifies the process of creating, deploying, and running applications by packaging them into containers. Think of containers as lightweight, portable boxes that contain everything an application needs to run, including code, libraries, and system tools.

Key Concepts in Docker

Containers

Containers are **isolated environments** that package an application and its dependencies together. They ensure that the application runs consistently across different computing environments, from a developer’s laptop to a production server.

Images

Docker images are the blueprints for containers. They are read-only templates that contain the application code, runtime, libraries, and dependencies needed to run an application.

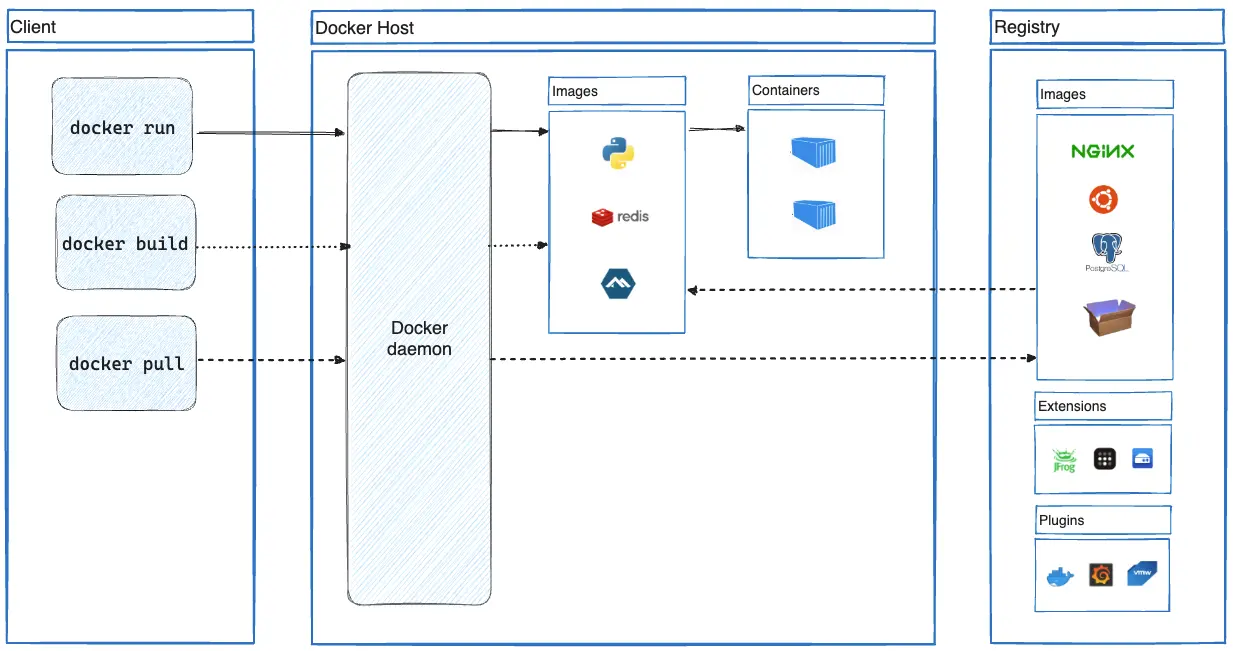

Docker Engine

This is the core technology that builds and runs containers on your machine. It is a client-server application: the `docker` command-line client sends requests to the Docker daemon (`dockerd`), which builds images and runs and manages containers.

Benefits of Docker

- Consistency: Applications run the same way in development, testing, and production environments.

- Portability: Containers can run on any system that supports Docker. On Windows and macOS, Docker Desktop runs Linux containers inside a lightweight virtual machine, so the same image works there too.

- Efficiency: Containers share the host system’s kernel, making them more lightweight than traditional virtual machines.

- Scalability: Docker makes it easy to scale applications up or down quickly by adding or removing containers.

How Does Docker Work?

- Developers create a Dockerfile, which specifies the application and its dependencies.

- The Dockerfile is used to build a Docker image.

- The image can be run to create a container, which executes the application.

- Containers can be easily shared, deployed, and managed across different environments.
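The steps above can be sketched as a small Python helper that shells out to the Docker CLI. This is a minimal sketch rather than an official workflow: the function names (`build_command`, `run_command`, `build_and_run`) and the tag `my-app` are hypothetical, and it assumes a Dockerfile already exists in the build context directory.

```python
import shutil
import subprocess


def build_command(tag: str, context: str = ".") -> list[str]:
    """Command that builds an image from the Dockerfile found in `context`."""
    return ["docker", "build", "-t", tag, context]


def run_command(tag: str) -> list[str]:
    """Command that starts a container from the image; --rm removes it on exit."""
    return ["docker", "run", "--rm", tag]


def build_and_run(tag: str, context: str = ".") -> None:
    """Build the image, then run a container from it (requires the docker CLI)."""
    if shutil.which("docker") is None:
        raise RuntimeError("the docker CLI was not found on PATH")
    subprocess.run(build_command(tag, context), check=True)
    subprocess.run(run_command(tag), check=True)


if __name__ == "__main__":
    # Only print the commands here; call build_and_run("my-app") to execute them.
    print(" ".join(build_command("my-app")))
    print(" ".join(run_command("my-app")))
```

Keeping command construction separate from execution makes the sequence easy to inspect before anything is actually built or run.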

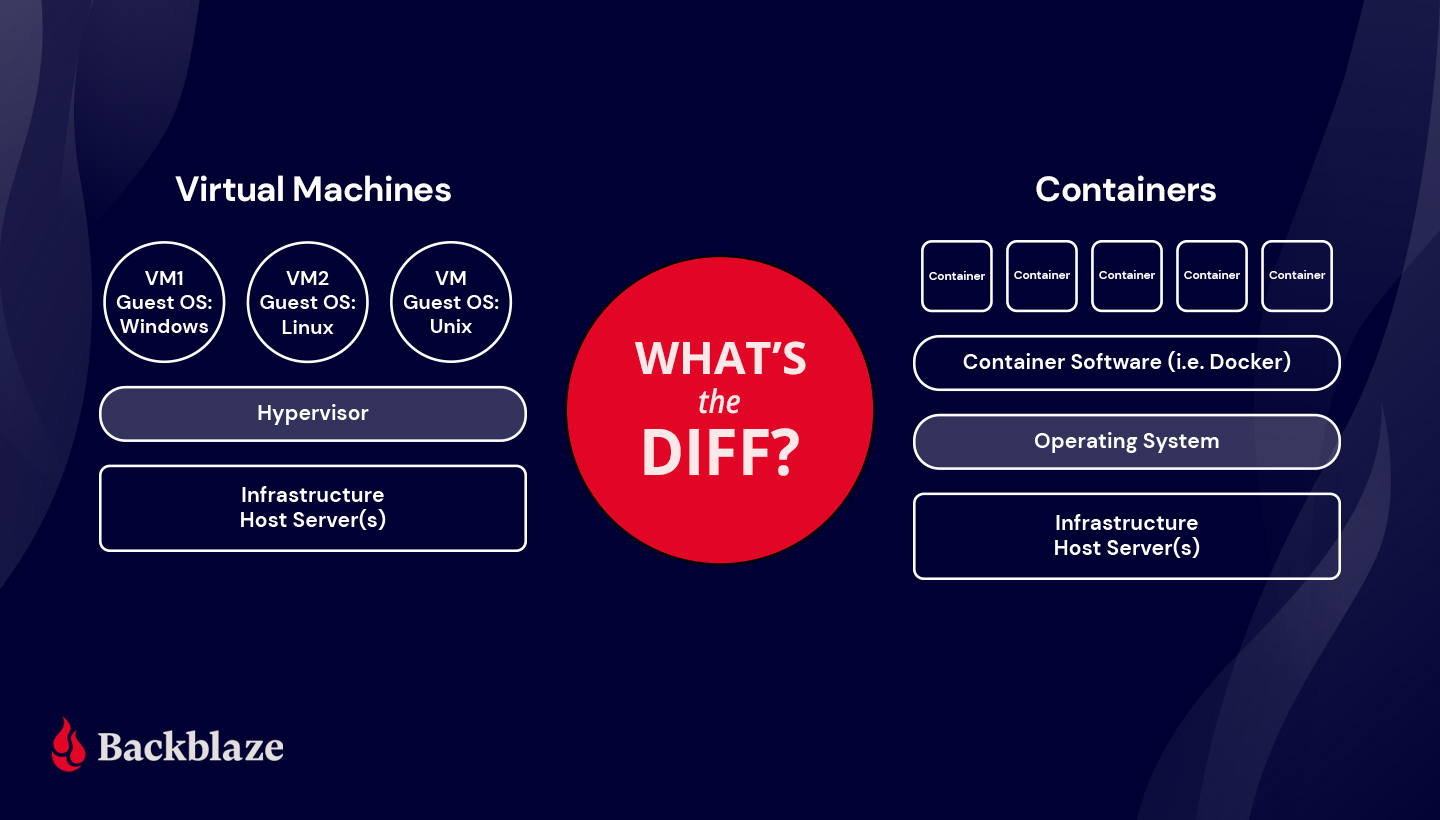

How Is It Different from a Virtual Machine?

| | Docker | Virtual Machine |
|---|---|---|
| Architecture | Uses containerization technology | Runs a complete guest operating system on top of a hypervisor |
| | Shares the host operating system kernel | Fully virtualizes the OS kernel and applications |
| Resource Utilization | Lightweight and efficient | More resource intensive |
| | Uses resources on demand | Typically requires CPU, RAM, and disk to be allocated to each VM up front |
| | Shares the host OS, leading to lower system resource consumption | Each VM runs a separate OS, consuming more CPU, RAM, and storage |
| Performance and Speed | Faster startup (typically seconds or less) | Longer startup times (tens of seconds to minutes) |
| | Ideal for microservices and cloud-native applications | Better suited for monolithic or legacy applications |
| Isolation and Security | Provides process-level isolation | Offers stronger isolation due to complete OS separation |
| | Shares the host kernel, potentially increasing security risks | Each VM runs independently, enhancing security |
| Use Cases | Ideal for microservices architecture | Better for scenarios requiring full OS isolation |
| | Efficient for CI/CD pipelines and rapid development | Suitable for running applications on different operating systems |
| | Suitable for cloud-native applications | Preferred for legacy applications or when strong isolation is necessary |

Purpose and Relevance to Data Engineering

- Purpose of Docker:

  - Packages software into isolated containers.

  - Ensures reproducibility across different environments (local, cloud, etc.).

  - Enables running self-contained data pipelines and services (like Postgres or pgAdmin) without affecting the host system.

- Relevance for Data Engineers:

  - Facilitates local experiments and integration tests.

  - Supports reproducible environments for development and deployment.

  - Helps manage dependencies (e.g., Python, Pandas) without cluttering the host system.

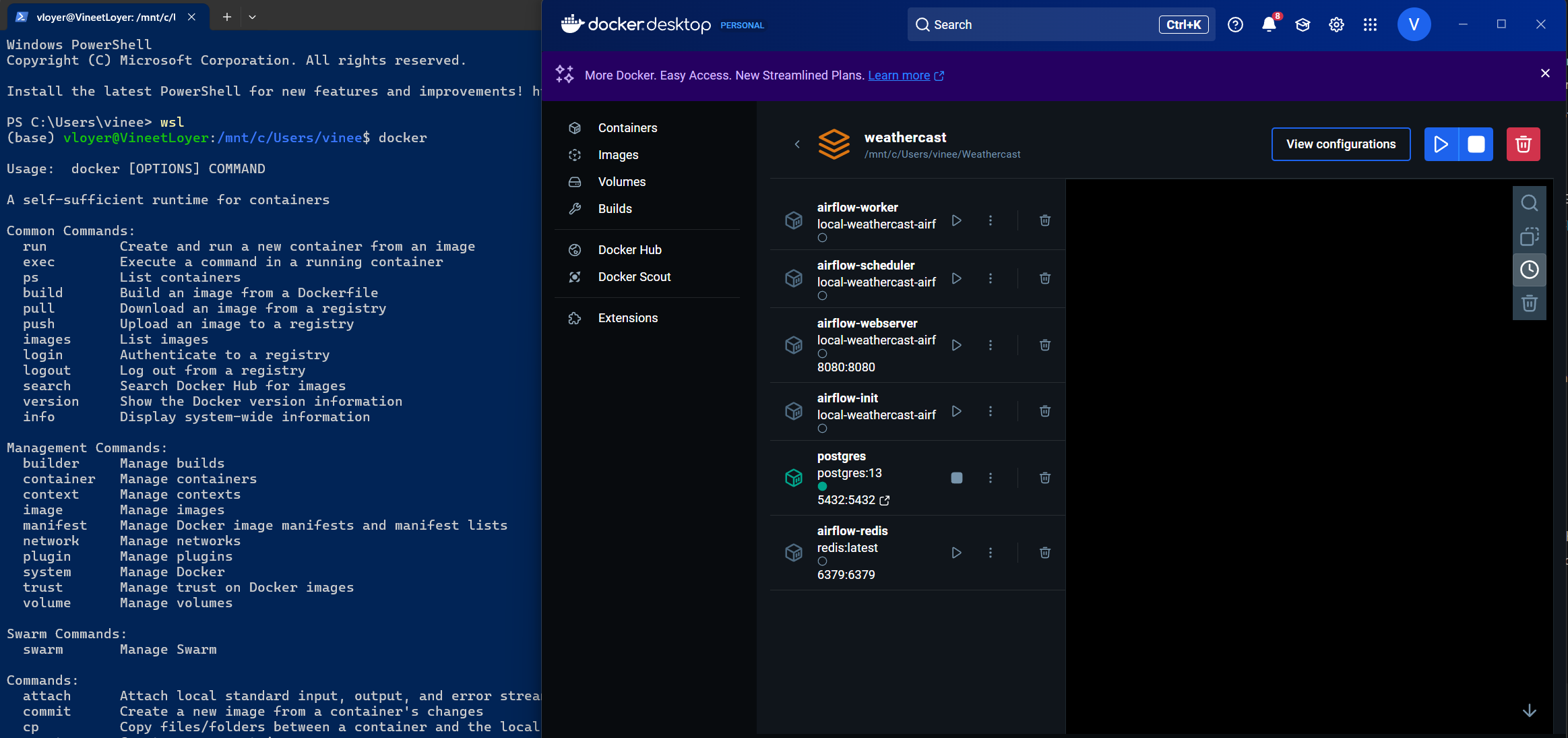

Getting Hands-On with Docker

This section assumes that Docker and Docker Desktop are already installed (for installation on Windows, see https://docs.docker.com/desktop/setup/install/windows-install/ ).

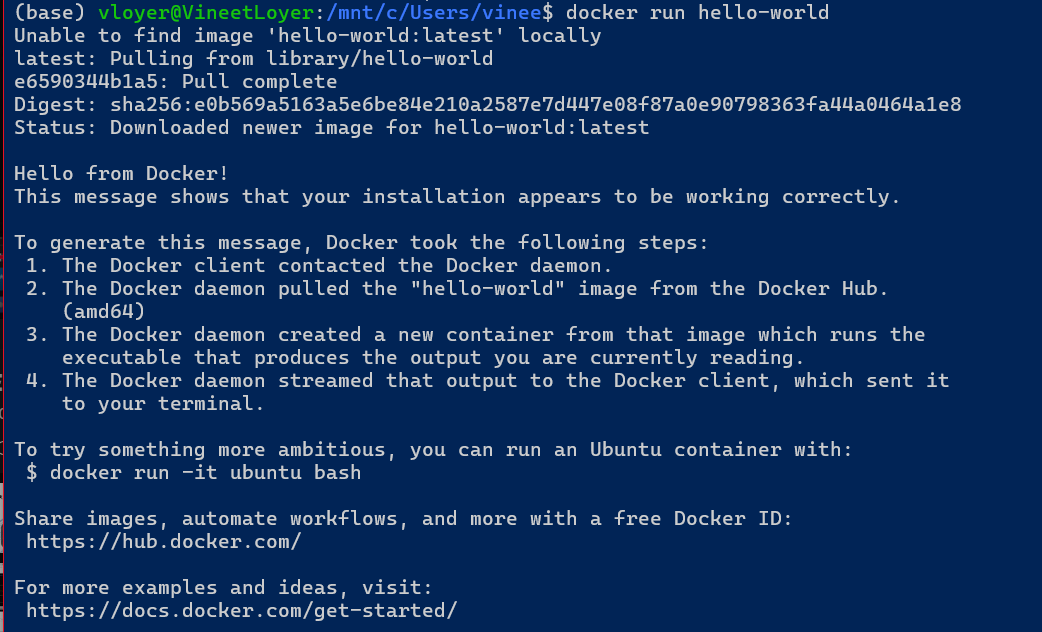

docker run hello-world

What will this do? Its purpose is to validate the Docker installation and the connection to Docker Hub.

Docker first looks for the `hello-world` image locally. If it is not found, Docker downloads it from Docker Hub, the default public registry where images (snapshots) are stored, and then starts a container from that image.

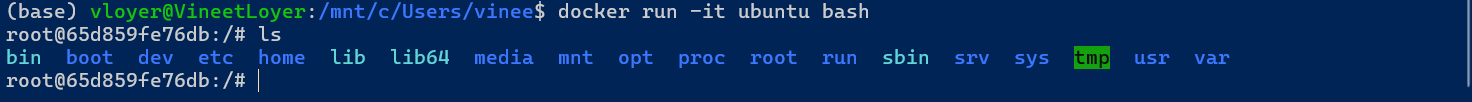

Running an interactive Ubuntu Container:

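docker run -it ubuntu bash

The `-it` flags must come before the image name; anything placed after the image name is passed to the container as the command to run.

Isolation: If we delete the contents of the container (everything, including system commands, for example with `rm -rf /`; recent versions of GNU `rm` require `--no-preserve-root` for this), the host machine is not affected at all. We can exit, run `docker run -it ubuntu bash` again, and Docker will give us a fresh container.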

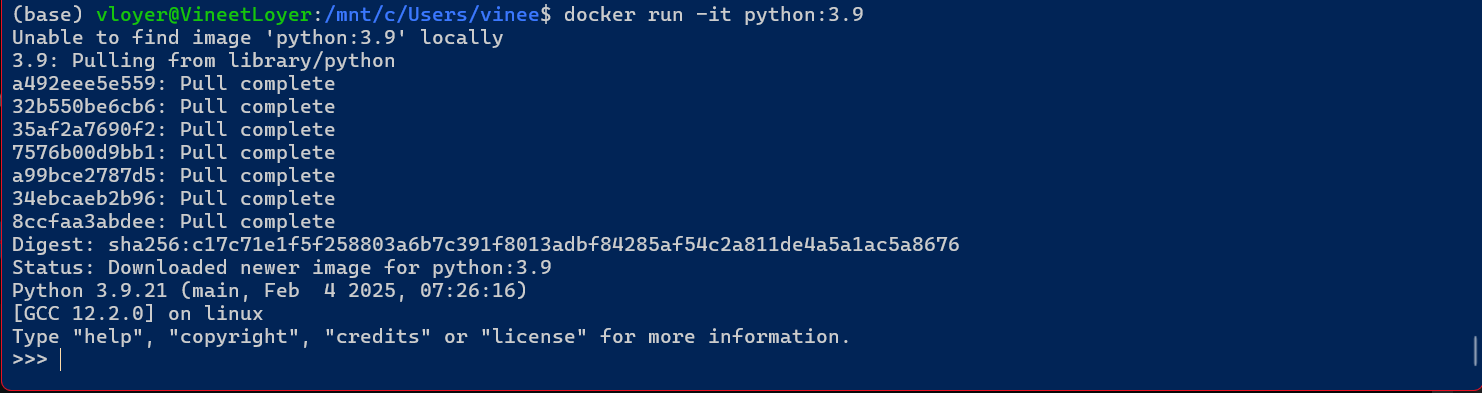

Running a Python Container and Installing Dependencies:

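docker run -it python:3.9

This starts a container with an interactive Python 3.9 prompt (without `-it` the interpreter has no terminal attached and exits immediately). To install Pandas inside the container we need a shell rather than the Python prompt, so we start the container with a different entry point: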

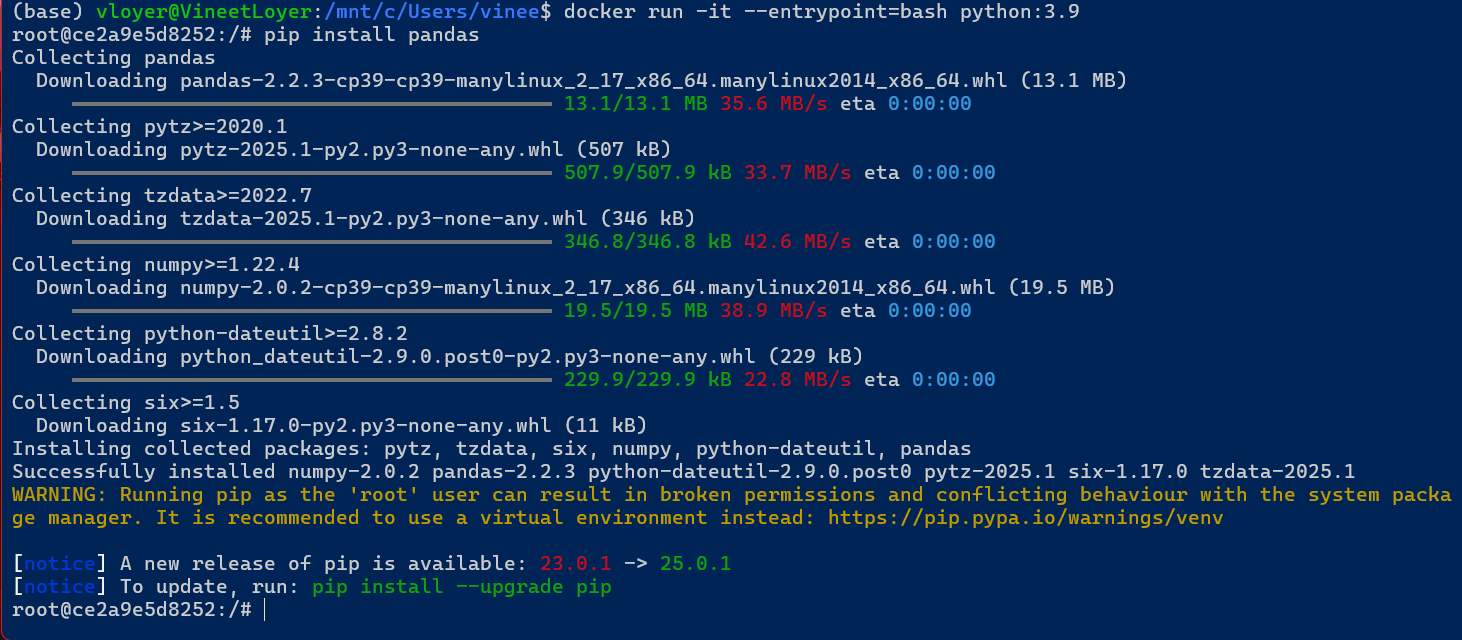

docker run -it --entrypoint=bash python:3.9

`--entrypoint=bash` starts a bash shell instead of the image's default Python interpreter, so we can run shell commands inside the container:

pip install pandas

Outcome: Pandas is installed only in this container's writable layer. The `python:3.9` image itself is unchanged, so a new container started from it will not have Pandas.

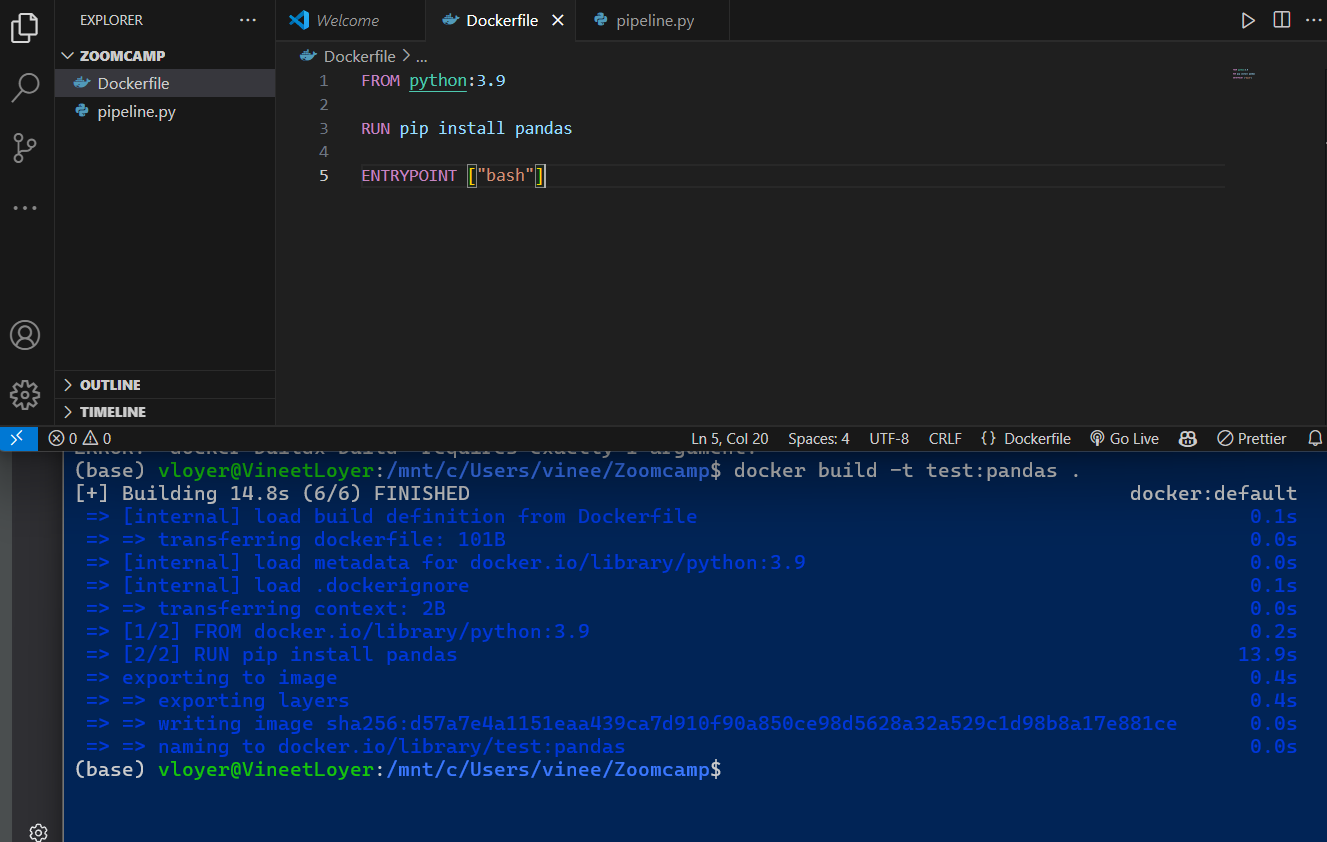

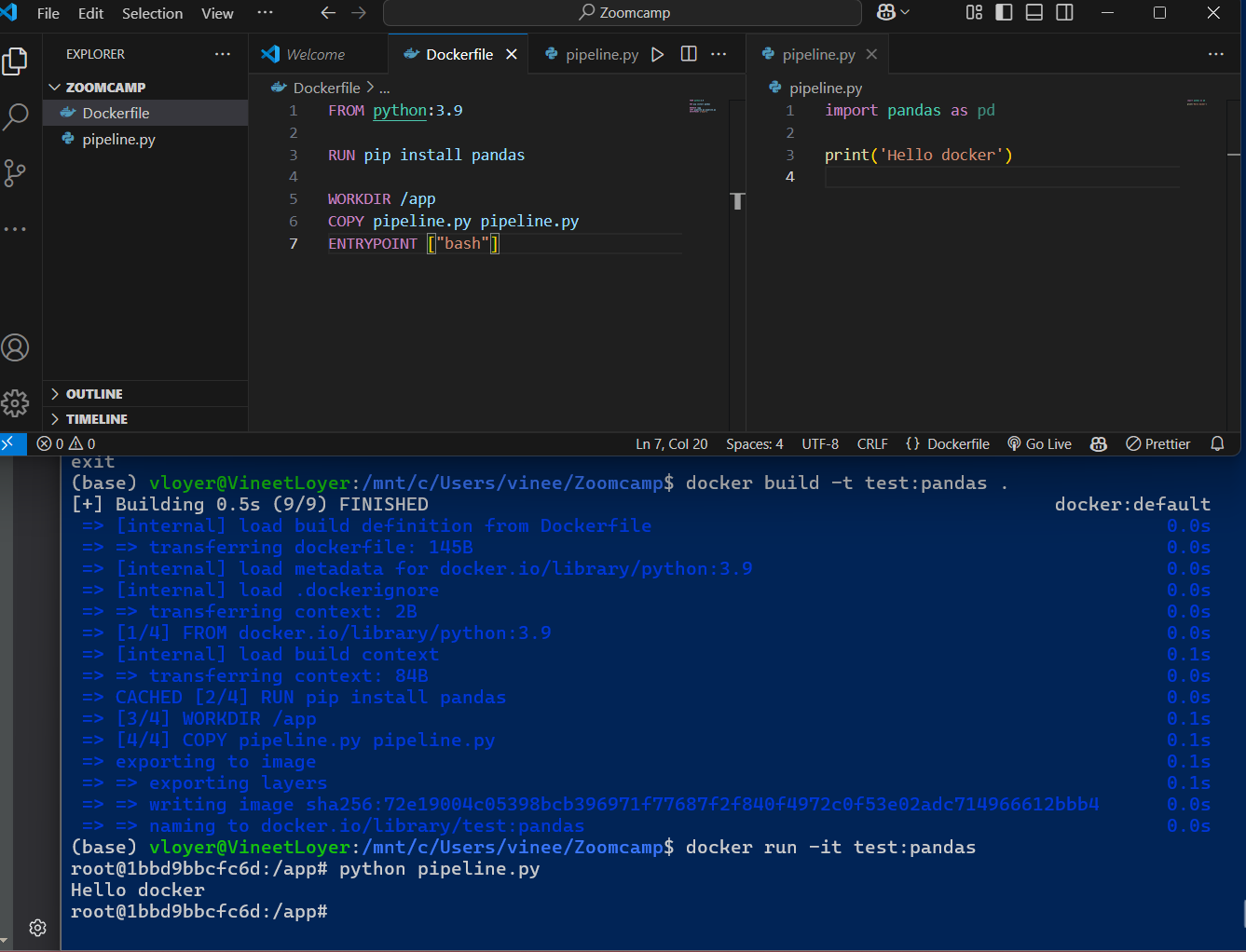

Creating a Docker Image Using a Dockerfile

A Dockerfile is a script containing a series of instructions used to create a Docker image. This image can be used to run a container that has all the dependencies and config required to run an application.

1. Writing a Dockerfile

Base Image Specification

FROM python:3.9.1

This specifies that the container should be based on Python 3.9.1. It ensures that Python is pre-installed in the container.

Any operations executed in the container will use this Python environment.

Installing Dependencies

RUN pip install pandas

The `RUN` instruction executes shell commands during the image build process.

- `pip install pandas` installs the Pandas library so that it is available inside the container.

- The installed packages become part of the image, so they persist across container runs.

Setting the Working Directory & Copying Files

WORKDIR /app

COPY pipeline.py /app/

- `WORKDIR /app`

  - Sets `/app` as the working directory inside the container.

  - Any subsequent instructions will execute inside `/app`.

- `COPY pipeline.py /app/`

  - Copies the `pipeline.py` script from the host machine to `/app` inside the container.

  - This ensures the Python script is available for execution.

2. Defining the Entry Point

Initially, the entry point is set to a bash shell for interactive use, allowing manual execution inside the container. Later, it is overridden to run the data pipeline script automatically:

ENTRYPOINT ["python", "pipeline.py"]

- Defines the default command that runs when the container starts.

- Instead of launching a shell (`bash`), it executes `python pipeline.py`.

- This ensures that the data pipeline script runs automatically when the container is started.
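Putting the instructions from this section together gives a Dockerfile with five instructions. The Python sketch below writes that file into a build directory so the result can be inspected. The helper name `write_dockerfile` and the use of a temporary directory are illustrative assumptions, and `pipeline.py` still has to be placed next to the Dockerfile before building.

```python
import tempfile
from pathlib import Path

# The instructions covered above, in the order they appear in the Dockerfile.
DOCKERFILE = """\
FROM python:3.9.1

RUN pip install pandas

WORKDIR /app
COPY pipeline.py /app/

ENTRYPOINT ["python", "pipeline.py"]
"""


def write_dockerfile(directory: Path) -> Path:
    """Write the Dockerfile into `directory` and return its path."""
    path = directory / "Dockerfile"
    path.write_text(DOCKERFILE)
    return path


if __name__ == "__main__":
    build_dir = Path(tempfile.mkdtemp())  # throwaway build context for the demo
    print(write_dockerfile(build_dir).read_text())
```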

Building the Docker Image

docker build -t test .

- `docker build` → Command to build a new Docker image.

- `-t test` → Assigns the name (tag) `test` to the image.

- `.` → Uses the current directory as the build context; by default Docker looks for the Dockerfile there.

Outcome:

- A Docker image named `test` is created.

- This image contains:

  - Python 3.9.1

  - Pandas library

  - The `pipeline.py` script

  - A predefined entry point (`python pipeline.py`)
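The contents of `pipeline.py` are not shown here. A hypothetical minimal version might look like the sketch below, assuming the script takes a single date-like argument and uses Pandas only to format a one-row status table. The function name `build_report` and the argument are illustrative, not the actual pipeline.

```python
import sys

import pandas as pd


def build_report(day: str) -> pd.DataFrame:
    """Return a one-row status table for the given day (placeholder logic)."""
    return pd.DataFrame({"day": [day], "status": ["finished"]})


if __name__ == "__main__":
    # Arguments placed after the image name in `docker run` arrive in sys.argv.
    day = sys.argv[1] if len(sys.argv) > 1 else "unknown"
    print(build_report(day).to_string(index=False))
```

With this file next to the Dockerfile, `docker build -t test .` followed by `docker run test 2021-01-15` would run the script with `2021-01-15` as its argument, because anything after the image name is appended to the exec-form `ENTRYPOINT`.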